Hi! I'm Daniel Faronbi

I'm a Music Technology Researcher, Jazz Pianist, and Music Producer. My research uses signal processing and machine learning techniques to generate and analyze music and other audio signals, in areas such as Generative Audio Models, Music Information Retrieval, and Computational Musicology. I am a PhD student and Steinhardt Fellow in the Music and Audio Research Lab (MARL). For many years, I have been a dedicated student of both the arts and STEM, with degrees in Music Technology and Computer Engineering. My work aims to bridge these two schools of thought to create something unique.

Neural audio compression models produce highly compact embedding spaces from which high-quality audio can be decoded. However, these spaces are very hard to interpret. We propose a method for imposing interpretability on this embedding space and use it to generate music modularly by combining models that were trained separately. To accomplish this, we propose to train a model that disentangles musical features from the residual vector quantization (RVQ) tokens generated by neural audio compression models. Changing these musical features and converting back to the compressed space will let us alter the content of the generated signal, providing an additional axis of control.
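
As a generic illustration of what RVQ tokens are (not the proposed disentanglement model), the sketch below encodes embedding frames with a stack of codebooks. Each stage quantizes the residual left over by the previous stages, so every frame becomes one integer token per stage, and decoding simply sums the selected codewords. The random codebooks and frames are placeholders; a trained codec learns its codebooks from audio.

```python
import numpy as np


def rvq_encode(x, codebooks):
    """Encode frames x (shape: frames x dim) as one token per quantizer stage.

    Each stage picks the codeword nearest to whatever the previous stages
    failed to capture (the residual).
    """
    residual = np.asarray(x, dtype=float).copy()
    tokens = []
    for cb in codebooks:  # cb has shape (codebook_size, dim)
        # Squared Euclidean distance from every residual frame to every codeword
        dists = ((residual[:, None, :] - cb[None, :, :]) ** 2).sum(axis=-1)
        idx = dists.argmin(axis=1)
        tokens.append(idx)
        residual = residual - cb[idx]
    return np.stack(tokens, axis=1)  # shape (frames, n_stages)


def rvq_decode(tokens, codebooks):
    """Map tokens back to the embedding space by summing the chosen codewords."""
    out = np.zeros((tokens.shape[0], codebooks[0].shape[1]))
    for stage, cb in enumerate(codebooks):
        out += cb[tokens[:, stage]]
    return out


rng = np.random.default_rng(0)
frames = rng.normal(size=(8, 4))  # stand-in for encoder output, not real audio
books = [rng.normal(scale=0.5**s, size=(16, 4)) for s in range(3)]
tokens = rvq_encode(frames, books)
print(tokens.shape)  # (8, 3)
print(np.linalg.norm(frames - rvq_decode(tokens, books)))
```

The token matrix is the discrete representation that a disentangling model would operate on; editing features and re-encoding would produce a new token matrix for the codec's decoder.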

Sound+Science is an innovative after-school program, research lab, and community in NYC for high school students interested in exploring the deep relationship between music, science, and technology. Through its curriculum, participants explore modules such as ‘The Hidden Codes in Electronic Music’, ‘From Hip-Hop to AI’, and ‘Music Improvisation and the Evolution of the Mathematics of Computation’, revealing the profound connections between music, technology, physics, and mathematics.

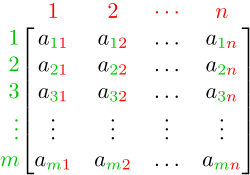

The matrix exponential is a cornerstone operation in computational mathematics, with applications spanning control theory, quantum mechanics, and modern machine learning. While Padé approximants combined with scaling and squaring have long been the standard for its computation, recent advances in Taylor-based methods have demonstrated not only superior accuracy but also greater computational efficiency. In this work, we introduce an accelerated implementation of the matrix exponential based on high-order Taylor series, employing advanced polynomial evaluation techniques that surpass the classical Paterson–Stockmeyer scheme. We tailor the algorithm and its implementation to a concrete generative AI scenario with a predefined error tolerance. We further optimize the polynomial evaluation sequence and exploit sparsity and low-rank structure.
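
The classical ingredients this work builds on (a truncated Taylor series, scaling and squaring, and Paterson–Stockmeyer polynomial evaluation) can be sketched in a few lines. This is a baseline illustration only: the degree and scaling threshold are illustrative defaults rather than values derived from an error tolerance, and the faster evaluation schemes and sparsity/low-rank optimizations described above are not reproduced. Paterson–Stockmeyer precomputes powers up to roughly the square root of the degree and then runs Horner's rule in that power, so a degree-m polynomial costs about 2√m matrix products instead of m.

```python
import math
import numpy as np


def paterson_stockmeyer(coeffs, A):
    """Evaluate p(A) = sum_k coeffs[k] * A^k with the Paterson-Stockmeyer scheme."""
    A = np.asarray(A, dtype=float)
    m = len(coeffs) - 1
    s = max(1, math.isqrt(m))  # block size ~ sqrt(degree)
    powers = [np.eye(A.shape[0])]
    for _ in range(s):  # precompute A^0 .. A^s once
        powers.append(powers[-1] @ A)
    A_s = powers[s]
    result = np.zeros_like(A)
    for j in range(m // s, -1, -1):  # Horner's rule in A^s, highest block first
        block = np.zeros_like(A)
        for i in range(s):
            k = j * s + i
            if k <= m:
                block += coeffs[k] * powers[i]
        result = result @ A_s + block
    return result


def expm_taylor(A, degree=18, theta=0.5):
    """exp(A) by scaling and squaring around a truncated Taylor polynomial.

    degree and theta are illustrative defaults, not tuned error bounds.
    """
    A = np.asarray(A, dtype=float)
    norm = np.linalg.norm(A, 1)
    # Number of squarings q chosen so that ||A / 2^q||_1 <= theta
    q = max(0, math.ceil(math.log2(norm / theta))) if norm > 0 else 0
    X = A / 2.0**q
    coeffs = [1.0 / math.factorial(k) for k in range(degree + 1)]
    E = paterson_stockmeyer(coeffs, X)
    for _ in range(q):  # undo the scaling: exp(A) = exp(A / 2^q)^(2^q)
        E = E @ E
    return E
```

The result can be checked against `scipy.linalg.expm`, which implements a Padé-based scaling-and-squaring algorithm.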

Advances in deep learning and audio signal processing have given machines the ability to listen to and understand a variety of real-world events. Malicious actors can use these methods to extract sensitive information from users. For example, models that listen to keyboard typing can identify the keys pressed and steal information such as passwords. We devise a new method to foil these attacks.

Professional mixing and mastering of recorded audio is a challenging task. The process involves creating a mix that performs well across various listening environments, which can be quite cumbersome. With existing tools, audio engineers must render the audio, test it in different settings, and then remix it, repeating this cycle until the track is finished. The introduction of 3D spatial audio has complicated matters further, since different speaker configurations call for even more complex and varied mixes. To address this, we propose MixShare, an application that lets users stream their spatial audio mix to another location. This allows the mix to be evaluated simultaneously in multiple listening environments without the need for rendering and remixing.

AFRINUM aims to renew the discourse about innovation in West African societies through the lens of the popular music industry. We contrast digital technology as a globalized tool with digital technology as a cultural artifact, in order to reflect on the many specific forms this tool takes and the values it refers to. This digital music culture will be investigated across a wide range of social, economic, and political contexts in which it participates and which it helps to transform, produce, or reify.

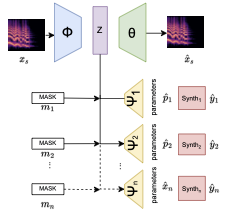

Automatic Synthesizer Programming is the task of transforming an audio signal generated by a virtual instrument into the parameters of a sound synthesizer that would generate this signal. Previous approaches handled only a single virtual instrument. In this paper, we expand the current literature by exploring approaches to automatic synthesizer programming for multiple virtual instruments. We present two different approaches to multi-task automatic synthesizer programming and find that the joint-decoder approach performs best. We also evaluate this model's performance on instruments with different timbres and with different latent dimension sizes.

Synth 3D is a VST sound synthesizer that allows users to place virtual oscillator objects in 3D space. This is accomplished by convolving oscillator signals with Head Related Impulse Responses (HRIRs) from the Spatial Audio for Domestic Interactive Entertainment (SADIE) project. The synthesizer comes with standard subtractive synthesis features: three oscillators, each individually convolved with an HRIR from SADIE, two filters, and ADSR envelopes for the filters and amplifier. Synth 3D is available as a VST3 synthesizer and Audio Units component for both Windows and Mac. The software was implemented using the JUCE framework, and all of the code is open source and available on GitHub.
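
The core spatialization step, convolving each oscillator with its own left/right HRIR pair and summing the results, can be sketched offline in NumPy. The toy HRIRs below (a pure delay plus gain) are hypothetical stand-ins for measured SADIE responses, the 48 kHz sample rate is an assumption, and the plugin's filters and envelopes are omitted.

```python
import numpy as np

SR = 48_000  # sample rate in Hz (assumed for this sketch)


def saw(freq, n_samples, sr=SR):
    """Naive (non-band-limited) sawtooth oscillator in [-1, 1)."""
    phase = (freq * np.arange(n_samples) / sr) % 1.0
    return 2.0 * phase - 1.0


def toy_hrir_pair(delay, gain, length=64):
    """Hypothetical stand-in for a measured HRIR pair (not SADIE data).

    Models a source toward the listener's right: the right ear gets the direct
    impulse, the left ear a delayed and attenuated copy.
    """
    left, right = np.zeros(length), np.zeros(length)
    right[0] = 1.0
    left[delay] = gain
    return left, right


def spatialize(signals, hrir_pairs):
    """Convolve each mono oscillator with its own (left, right) HRIR pair and sum.

    Assumes all signals share one length and all HRIRs share one length.
    """
    mix = None
    for sig, (h_left, h_right) in zip(signals, hrir_pairs):
        stereo = np.stack([np.convolve(sig, h_left), np.convolve(sig, h_right)])
        mix = stereo if mix is None else mix + stereo
    return mix  # shape (2, len(signal) + len(hrir) - 1)


n = SR // 10  # 100 ms of audio
oscs = [saw(f, n) for f in (110.0, 220.0, 330.0)]
pairs = [toy_hrir_pair(d, g) for d, g in ((0, 1.0), (12, 0.6), (24, 0.4))]
binaural = spatialize(oscs, pairs)
print(binaural.shape)  # (2, 4863)
```

A real-time plugin would instead convolve block by block (for example with partitioned FFT convolution); for long offline signals, `scipy.signal.fftconvolve` gives the same result as `np.convolve` more quickly.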

Librosa, developed by Dr. Brian McFee, is a Python package for music and audio analysis. It provides the building blocks necessary to create music information retrieval systems. Version 0.9 expanded the library's API to support seamless processing of multichannel audio signals.

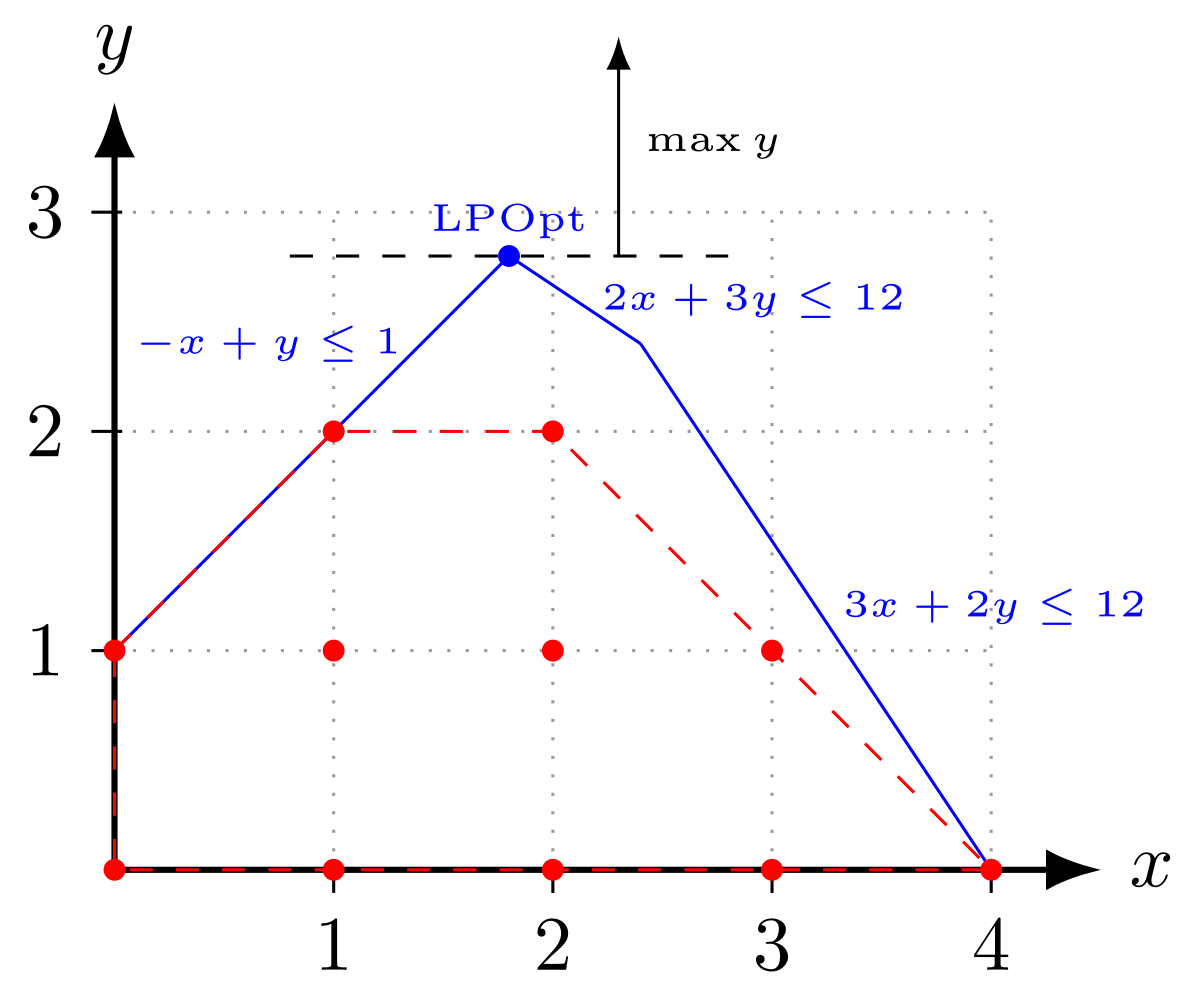

This optimization research project explored novel approaches to linear programming solvers. It was conducted in the University of Nebraska Omaha's Data and Decisions Sciences Laboratory under the supervision of Dr. Fabio Torres Vitor. The project focused on adding multidimensional searches to the traditional interior point method.
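
For context, the sketch below implements one classical member of the interior point family, the primal affine-scaling method, for a linear program in standard form (minimize cᵀx subject to Ax = b, x ≥ 0). It is not the project's algorithm: each iteration here searches along a single direction with a single step length, which is presumably the step the project's multidimensional searches would generalize. The step fraction `beta`, the tolerance, and the example LP are illustrative assumptions.

```python
import numpy as np


def affine_scaling(c, A, b, x0, beta=0.9, tol=1e-9, max_iter=500):
    """Primal affine scaling for min c^T x s.t. A x = b, x >= 0.

    x0 must be strictly feasible (A x0 = b and every entry > 0).
    """
    x = np.asarray(x0, dtype=float)
    if not (np.allclose(A @ x, b) and np.all(x > 0)):
        raise ValueError("x0 must be strictly feasible")
    for _ in range(max_iter):
        D2 = np.diag(x**2)  # scaling that maps the current iterate to the all-ones vector
        w = np.linalg.solve(A @ D2 @ A.T, A @ D2 @ c)  # dual estimate
        r = c - A.T @ w  # reduced costs
        if np.all(r >= -tol) and x @ r < tol:  # dual feasible, small duality gap
            return x
        dx = -D2 @ r  # scaled steepest-descent direction; satisfies A @ dx == 0
        if np.all(dx >= 0):
            raise ValueError("problem is unbounded")
        neg = dx < 0
        # One-dimensional step: a fraction beta of the distance to the boundary x >= 0
        alpha = beta * np.min(-x[neg] / dx[neg])
        x = x + alpha * dx
    raise RuntimeError("did not converge")


# Maximize x1 + 2*x2 s.t. x1 + x2 <= 4, x2 <= 3, written with slack variables
c = np.array([-1.0, -2.0, 0.0, 0.0])
A = np.array([[1.0, 1.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]])
b = np.array([4.0, 3.0])
x = affine_scaling(c, A, b, [1.0, 1.0, 2.0, 2.0])
print(np.round(x, 6))  # approximately [1, 3, 0, 0]
```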

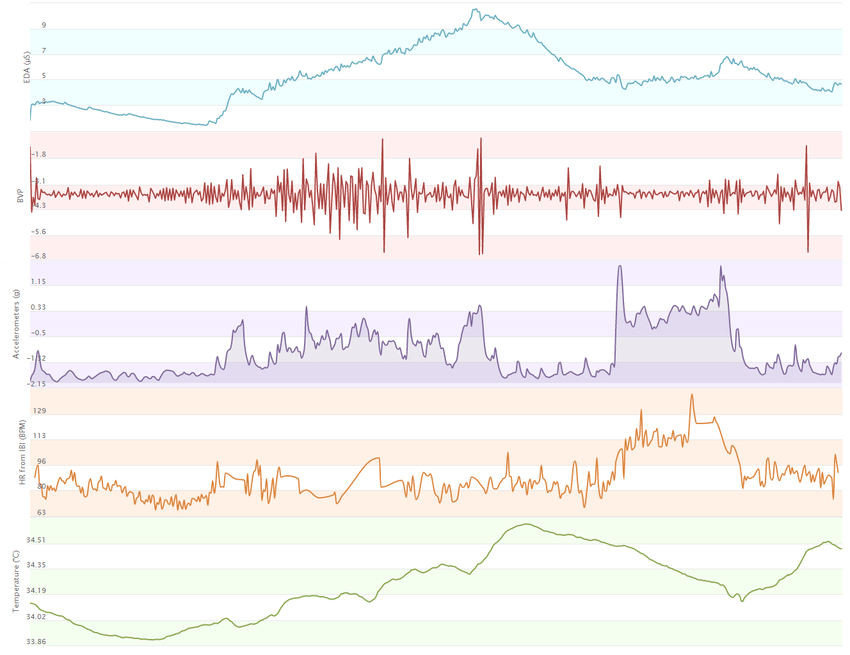

Research on wearable computing conducted with the Embedded & Pervasive Systems Lab at Washington State University under the direction of Dr. Hassan Ghasemzadeh. The goal was to use biometric signal data to predict the likelihood of alcohol relapse. Participants wore Empatica's E4 wristband while performing regular tasks. The signal data was analyzed for correlation with alcohol cravings, and binary classification models were developed to predict the likelihood of giving in to these cravings.
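
The description does not name the features or classifier used. Purely as an illustration, assuming per-window mean and standard deviation features from a single sensor channel and a logistic-regression classifier (both hypothetical choices), a binary classification pipeline might look like this. The data are synthetic and say nothing about the study's results.

```python
import numpy as np


def window_features(signal, win):
    """Split a 1-D sensor stream into non-overlapping windows; return [mean, std] per window."""
    n = len(signal) // win
    frames = np.asarray(signal[: n * win], dtype=float).reshape(n, win)
    return np.column_stack([frames.mean(axis=1), frames.std(axis=1)])


def train_logistic(X, y, lr=0.1, epochs=2000):
    """Fit logistic regression with batch gradient descent on standardized features."""
    mu, sigma = X.mean(axis=0), X.std(axis=0) + 1e-12
    Xs = (X - mu) / sigma
    w, b = np.zeros(Xs.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(Xs @ w + b)))  # predicted probability of class 1
        grad = p - y  # gradient of the cross-entropy loss w.r.t. the logits
        w -= lr * Xs.T @ grad / len(y)
        b -= lr * grad.mean()
    return w, b, mu, sigma


def predict(X, w, b, mu, sigma):
    """Return hard 0/1 labels (probability threshold 0.5)."""
    return (((X - mu) / sigma) @ w + b > 0).astype(int)


# Synthetic demo only: two classes whose windows differ in mean and spread
rng = np.random.default_rng(0)
win = 64
class0 = window_features(rng.normal(0.0, 1.0, 100 * win), win)
class1 = window_features(rng.normal(1.0, 2.0, 100 * win), win)
X = np.vstack([class0, class1])
y = np.concatenate([np.zeros(100), np.ones(100)])
w, b, mu, sigma = train_logistic(X, y)
print((predict(X, w, b, mu, sigma) == y).mean())  # training accuracy
```

In practice, accuracy should be measured on held-out participants rather than on the training windows.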

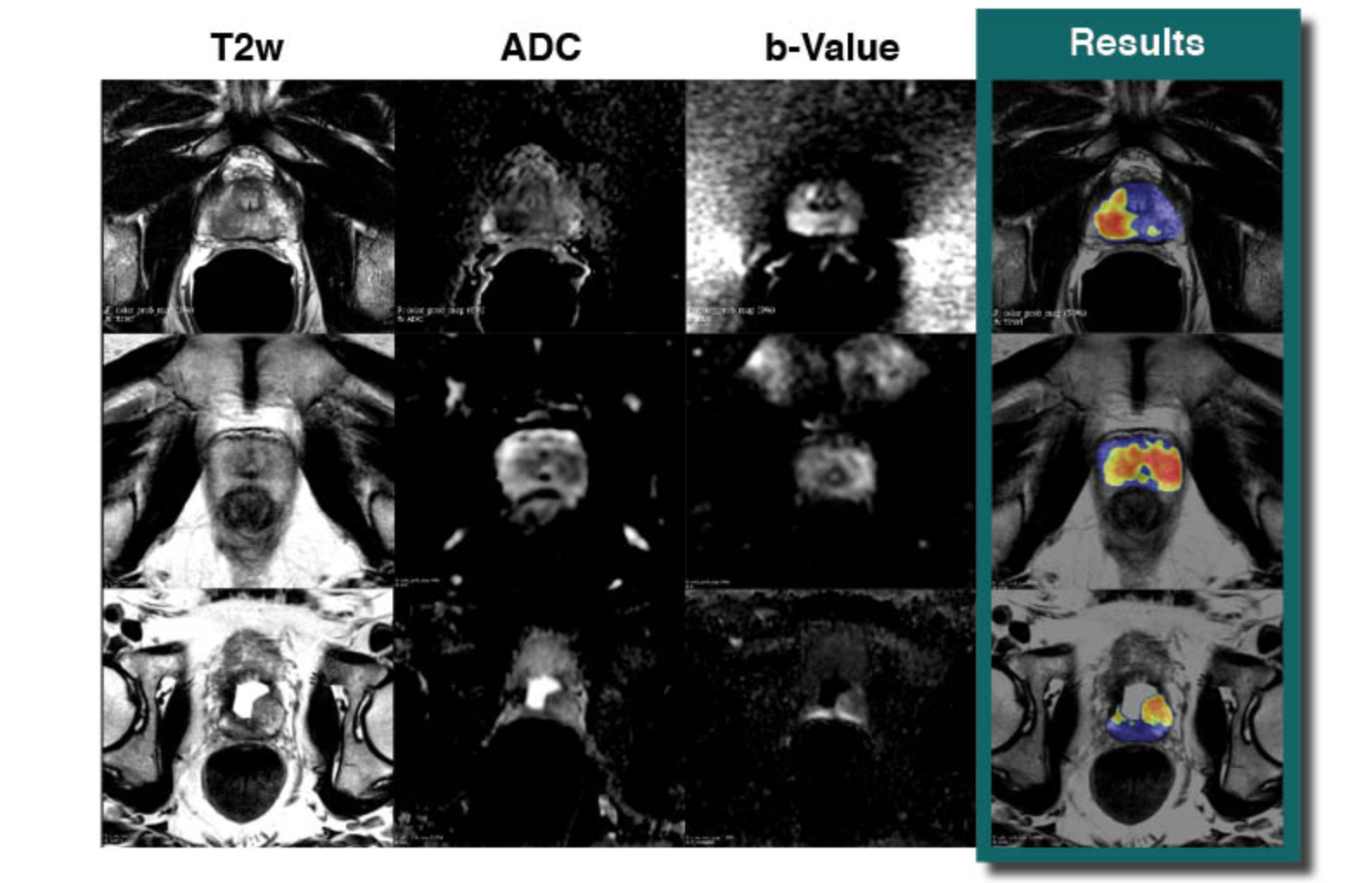

ProstatID™ is a software interface for post-processing MRI that uses cloud-based computing, physician workstation integration, and AI image interpretation to provide highly accurate prostate cancer diagnostics.

The Wi-Fi Synthesizer project was conceived as a device that reads MIDI input and generates audio waveforms from the information in the MIDI signal. The device would also transmit the received MIDI signal over Wi-Fi to a website via an HTTP request. The MIDI component was left out of the final application because of time constraints, but its circuitry was still included in the design. The final application outputs a 100 Hz audio signal with a user-selected wave shape (one of four options) and sends data to a website indicating which waveform was last selected.
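
The waveform output of the final application can be sketched in software. Assuming the four shapes were sine, square, triangle, and sawtooth (the text does not name them) and an 8 kHz sample rate (also an assumption), one period of the 100 Hz signal spans 8000 / 100 = 80 samples.

```python
import numpy as np

SHAPES = ("sine", "square", "triangle", "sawtooth")  # assumed four options


def waveform(shape, freq=100.0, sr=8000, n_samples=800):
    """Return n_samples of a naive (non-band-limited) waveform in [-1, 1]."""
    # Phase in cycles, in [0, 1)
    phase = (freq * np.arange(n_samples) / sr) % 1.0
    if shape == "sine":
        return np.sin(2 * np.pi * phase)
    if shape == "square":
        return np.where(phase < 0.5, 1.0, -1.0)
    if shape == "triangle":
        return 1.0 - 4.0 * np.abs(phase - 0.5)
    if shape == "sawtooth":
        return 2.0 * phase - 1.0
    raise ValueError(f"unknown shape {shape!r}; expected one of {SHAPES}")


selected = "triangle"  # e.g. the user's most recent choice
samples = waveform(selected)
print(samples[:5])
```

On a microcontroller the same phase-accumulator idea would typically drive a DAC or PWM output from a timer interrupt, one sample per tick.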

The objective of this project was to give students real-world experience building a microprocessor system. The system is based on Intel's 8051 microcontroller and performs a variety of memory functions to read, edit, move, and find contents in RAM. It also interfaces with several I/O devices, such as an LCD, a real-time clock, a seven-segment display, and an analog-to-digital converter.
